Hello everyone! today I'm going to be talking about everything related to SSTables and how popular databases such as Cassandra utilize them to achieve high data operation performance and throughput. We'll bump into different side topics here and there but I'll make sure to explain as much as I can so without further or do let's get started!

Foundation

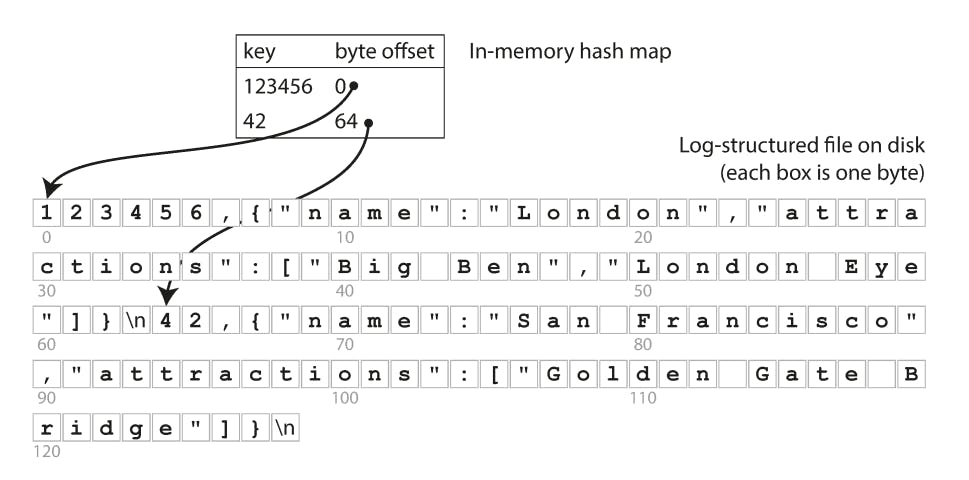

So before diving into SStables let's talk generally about databases. The simplest form of a database is just a file storing different keys and their respective values which is also called log-structured files

To find keys in this file you can for example have an index in memory that has all the keys and their respective offsets in the file. It may look like this

Now this is very simple to implement and is as fast as it can get since it only appends to the end of the file but has different problems;

Since the whole index is in memory if a crash occurs the index is gone and you'll be required to build the index again depending on the file size this could be a tedious operation. Some databases recover from this by taking snapshots of the index and saving them to disk.

Very bad for range queries since keys are not sorted in any way.

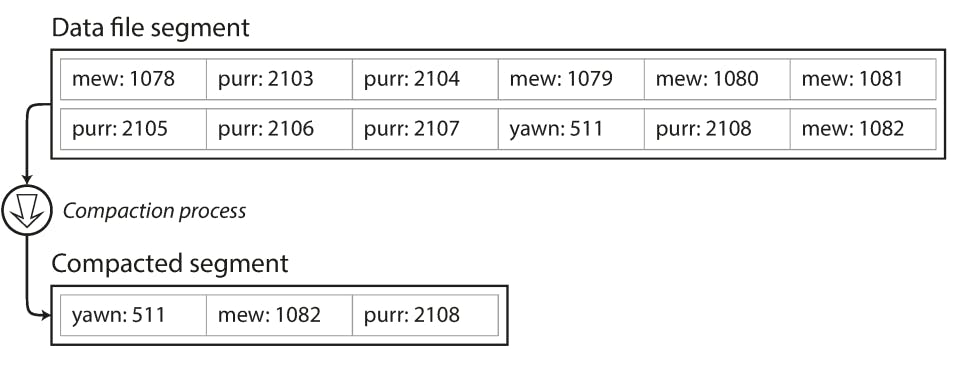

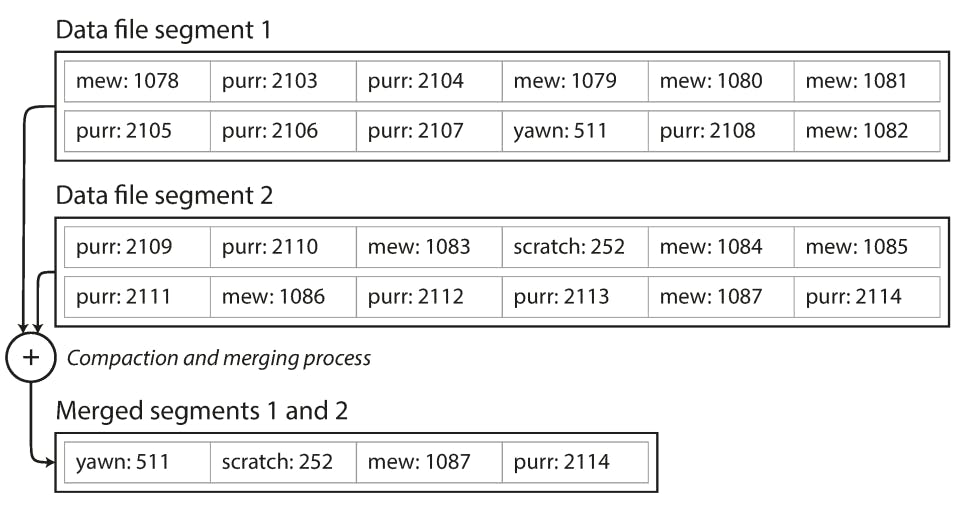

Very space inefficient, a solution to this is to cap the size of the file and freeze it after the cap is met. Then start writing to a new file. Then in the background, a compaction and merging process is done

Since we append only, Updates are just inserts in the log file with the new value and the same key. After some time merging is done to remove any duplicate keys taking the latest value inserted for each key. The merging keeps the file count small.

A downside is we have to have an index in memory for each file created. These indices are called Hash indices.

The problems discussed now leads us to what is now called SSTables

SSTables

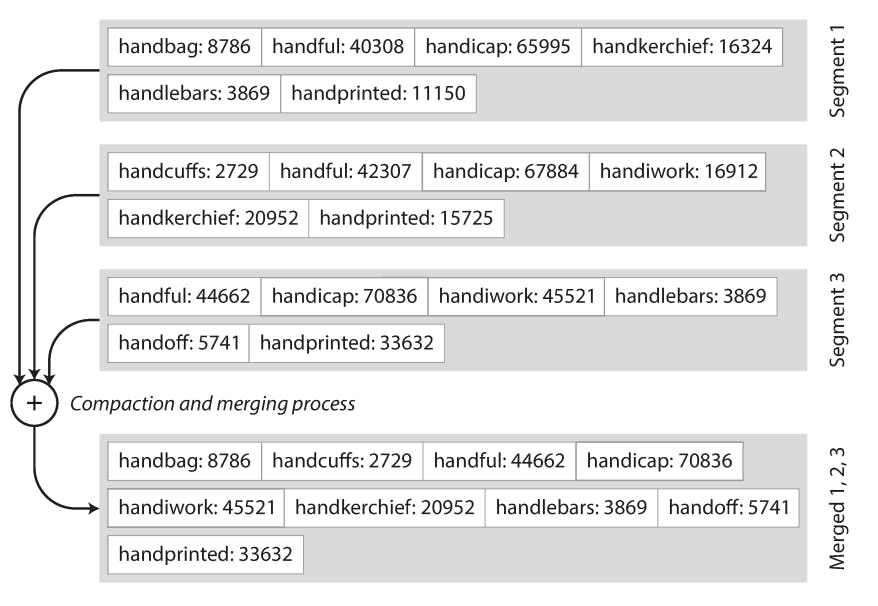

SS stands for Sorted-String Tables. They are segment files stored on disk where every key is in sorted order. Bare with me we'll get to how it gets sorted when storing it but since all keys are sorted. Merging becomes easier since we can use part of the mergesort algorithm to join both files and make a single sorted segment file.

Another bonus of doing this is that we don't need to store all the keys in our Hash index as we did previously. What we can do is have something called a sparse index meaning we have an index that stores some of the keys not all. So for example we store handbag and handprinted from the figure above and when searching for handicap we know it's between handbag and handprinted so we scan this area only.

This saves alot of space in memory and allows for efficient querying. As well as getting rid of the range query problem as now the keys are sorted we can easily do range queries.

Now How does the segment file get sorted in the first place since the files are append only?

This part is one of the main reasons many databases such as Cassandra have very high performance in reads and writes. Let's get to it.

Firstly when a write process happens, the data isn't directly stored on disk. It gets written in memory in a certain data structure that allows adding different values and returns them in sorted order. Data structures that do this are AVL Trees and Red Black Trees which are a type of binary search trees but they are always self-balanced which gives them an efficiency upper edge maintaining the time complexity for insertion, deletion and search of O(logn).

The data structure is called a memtable. Once the memtable exceeds a certain size it is then flushed to disk to a segment file.

In the background from time-to-time these segment files go through merging and compaction where the last value for each key is taken and values marked as deleted will be removed (tombstone operation). All this while the memtable keeps accepting new writes.

Since log-structured files are append-only. Deleting records means we mark the key we want to delete and append that to the log file so when merging occurs we know that this key got deleted.

- When a read request comes in, we first check the memtable then proceed to scan every segment from recent to old. That is if we didn't use a hash index.

One problem we will face during scanning through every segment is what if we are searching for a key that doesn't even exist in the segments? that would be a major problem as we'd have to scan every file in order to return nothing which is unnecessary work.

A way to fix this is by using Bloom Filters

Bloom filters

A Bloom filter is a space-efficient probabilistic data structure that is used to test whether an element is a member of a set. It uses the concept of hashing to check if a key belongs to a file or not. Since it's probabilistic it can give False Positive results which means sometimes it can tell you that a key exists in this file when it doesn't. It never gives false negative results.

Bloom filter uses binary 1s and 0s to dictate wether a key belongs to a file or not. Inserts in the bloom filter work as follows:

We create an array of bits of size n (The required size of the bloom filter)

The key inserted passes through k hashing functions and then we mod the result by the size of the bloom filter. This will give us k indcies.

We proceed to set the bits of these indcies to 1 in our bloom filter.

The search process does the same thing; hashing the key and checking if the k indcies have the bit set to 1. If all of them are set to 1 then we say that the key might be in this segment. If one of the indcies has 0 then we are sure that the key is not in this segment

Summary

In this blog, we delve into the concept of SSTables (Sorted String Tables) and their significance in achieving high-performance data operations in popular databases like Cassandra. We begin by discussing the challenges faced by simple log-structured files, such as the lack of sorting, memory-intensive indexing, and space inefficiency.

SSTables offer a solution to these issues. They are segment files stored on disk where keys are sorted in ascending order, facilitating efficient merging and range queries. With SSTables, the need for an in-memory hash index for each file is eliminated, replaced by a sparse index that stores only a subset of keys, optimizing memory usage and query efficiency.

We then explore how SSTables achieve their sorted order despite being append-only files. Data is initially stored in memory within a data structure called a memtable, which uses self-balancing binary search trees like AVL Trees or Red Black Trees. When the memtable exceeds a certain size, it is flushed to disk as a segment file. Periodic merging and compaction processes remove duplicates and tombstone operations (marking deleted records).

For read operations, the memtable is checked first, followed by scanning the segments from most recent to oldest. To optimize this process and avoid unnecessary scans, Bloom Filters are employed. Bloom Filters are probabilistic data structures that use hashing to determine if a key might exist in a file. While they can produce false positives, they never produce false negatives.

In summary, SSTables provide an efficient and organized approach to data storage, offering benefits like sorted keys, reduced memory usage, and improved query performance. Combined with the use of memtables, merging, compaction, and Bloom Filters, SSTables enable databases to achieve high read and write performance while ensuring data integrity and space efficiency.