Introduction

Hello everyone! In this article, I'm going to be walking through the basic steps to get an AWS lambda function up and running using Terraform. We will also watch for source code changes and redeploy the lambda function accordingly. Let's get started!

We'll be coding the lambda function using Go but you can really do any language you want.

Deploying AWS Lambda Using Terraform

First off let's define our Terraform providers

Defining Our Providers

# providers.tf

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "4.55.0"

}

null = {

source = "hashicorp/null"

}

archive = {

source = "hashicorp/archive"

}

local = {

source = "hashicorp/local"

}

}

required_version = ">= 1.3.7"

}

provider "aws" {

access_key = "access-key"

secret_key = "secret-keyeb"

region = "eu-central-1"

}

We use 4 providers:

AWS provider for terraform that allows us to provision resources on AWS (our lambda function).

Null provider which provides resources that do nothing 😂, We'll use this to watch for code changes as a trigger and execute a command that rebuilds our code. More on this provider here

The Archive provider allows us to package our compiled Go code to use as the lambda function. There are several ways to upload your code whether it's uploading them on S3 and pulling from there directly or just uploading a ZIP file containing your source code directly. The archive provider allows us to ZIP our code Anyway. More on Archive provider here

The local provider allows us to point to local files on our host. We are going to watch the changes to this file by checking its hashed value every time we do

terraform apply. More on the local provider here

AWS Lambda and IAM

We're going to define a role for our lambda function. The policies for this role are anything that we'd need to access from within our lambda function

For example, if we work with S3 within our function we'll need to add policies to allow lambda to access AWS S3. Since there's really nothing we're doing we're going to be giving it access to CloudWatch so it can log different events there.

resource "aws_iam_policy" "lambda_logging" {

name = "LambdaLogging"

path = "/"

description = "IAM policy for logging from a lambda"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

"Resource": "arn:aws:logs:*:*:*",

"Effect": "Allow"

}

]

}

EOF

}

resource "aws_iam_role" "lambda" {

name = "lambda"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "lambda.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

EOF

}

resource "aws_iam_role_policy_attachment" "lambda_logging" {

role = aws_iam_role.lambda.name

policy_arn = aws_iam_policy.lambda_logging.arn

}

From the code above, only the Lambda service can assume this role and when it does, it only has access to "logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"

Defining our Variables

We'll define a couple of variables that will help us when we come to the deployment steps.

# variables.tf

locals {

function_name = "free-palestine"

src_path = "${path.module}/lambda/${local.function_name}/main.go"

binary_name = local.function_name

binary_path = "${path.module}/tf_generated/${local.binary_name}"

archive_path = "${path.module}/tf_generated/${local.function_name}.zip"

}

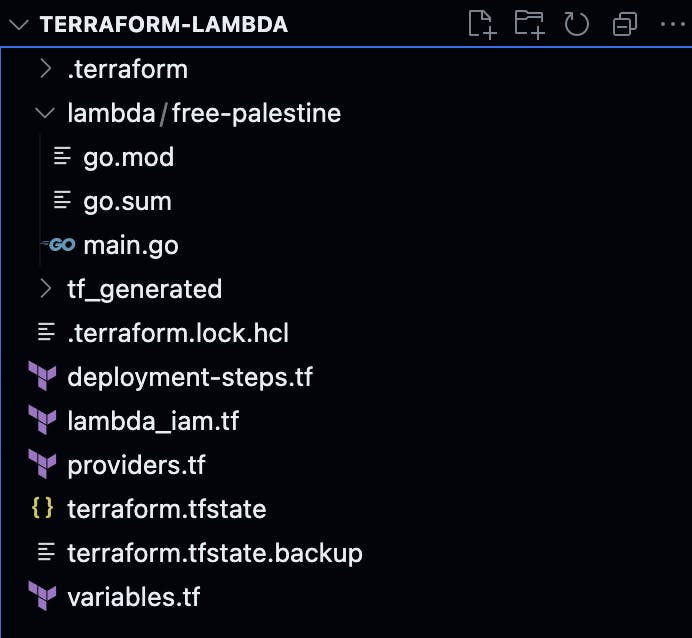

To understand this better, here is my directory structure.

Inside lambda/free-palestine we have a normal go module created that has a main.go file containing the lambda function source code.

// main.go

package main

import (

"context"

"fmt"

"github.com/aws/aws-lambda-go/lambda"

)

func HandleRequest(ctx context.Context, event interface{}) (string, error) {

fmt.Println("event", event)

return "Free Palestine ", nil

}

func main() {

lambda.Start(HandleRequest)

}

When we build the go binary and zip it we store these artifacts inside the tf generated directory.

Deploying the lambda function

Now all we need to do is the following:

Build the Go binary

ZIP it

Create a lambda function resource and pass the ZIP file to it.

Lets break this into 3 bits of code for more clarity.

# deployment.tf

data "local_file" "lambda_source" {

filename = "${path.module}/lambda/free-palestine/main.go"

}

resource "null_resource" "binary_file" {

triggers = {

source_code_hash = data.local_file.lambda_source.content_base64sha256

}

provisioner "local-exec" {

command = "GOOS=linux GOARCH=amd64 go build -o ${local.binary_path} ${local.src_path}"

}

}

We used the local provisioner to invoke the local_file resource which points to our source code file that we wish to monitor for changes.

Then in the null_resource we add a trigger where the local-exec will invoke every time the source code hash changes (i.e every time we make a change to our main.go)

Then we proceed with the command that creates a binary executable file from our Go code. We specify the Operating system and architecture and specify where the source code is and the output path.

# deployment.tf

data "archive_file" "function_archive" {

type = "zip"

source_file = local.binary_path

output_path = local.archive_path

depends_on = [null_resource.binary_file]

}

After compiling our code into a binary. We proceed to ZIP it providing the source_file required to be ZIPPED.

# deployment.tf

resource "aws_lambda_function" "function" {

function_name = "free-palestine"

description = "🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸🇵🇸"

role = aws_iam_role.lambda.arn

handler = local.binary_name

memory_size = 128

filename = local.archive_path

source_code_hash = data.archive_file.function_archive.output_base64sha256

runtime = "go1.x"

}

Now we use the lambda resource, providing a function name and description, the role we created earlier, a handler that can be the same as the function name and memory size in Megabytes.

Then we give it the ZIP file and the runtime which is go in our case.

Finally (optional) we create a cloudwatch log group that stores the logs of our lambda function for tracking and debugging, etc.

resource "aws_cloudwatch_log_group" "log_group" {

name = "/aws/lambda/${aws_lambda_function.function.function_name}"

retention_in_days = 7

}

Apply the following using terraform apply --auto-approve

Now using this CLI command we can check if our lambda function works or not.

aws lambda invoke --function-name free-palestine output_file

After invoking if we cat the output file cat output_file We should see Free Palestine as the output 🎉

Cleanup

Now each time we change our main.go file and do terraform apply the function should change accordingly.

Last but not least don't forget to do terraform destroy to clean up all resources.

That's been it for this article. See you in the next one!